by Dan Lockton

In a meta-auto-behaviour-change effort both to keep me motivated during a very protracted PhD write-up and to demonstrate that the end is in sight, I’m going to be publishing a few extracts from my thesis (mostly from the literature review, and before any rigorous editing) as blog posts over the next few weeks. It would be nice to think they might also be interesting brief articles in their own right, but the style is not necessarily blog-like, and some of the graphics and tables are ugly.

“It is now clear that we must take into account what the environment does to an organism not only before but after it responds. Behaviour is shaped and maintained by its consequences… It is true that man’s genetic endowment can be changed only very slowly, but changes in the environment of the individual have quick and dramatic effects.”

B.F. Skinner, Beyond Freedom and Dignity, 1971, p.24

Behaviourism as a psychological approach is based on empirical observation of human (and animal) behaviour–stimuli in the environment, and the behavioural responses which follow–and attempts in turn to apply stimuli to provoke desired responses. John B. Watson (1913, p.158), in laying out the behaviourist viewpoint, reacted against the then-current focus by Freud and others on unobservable concepts such as the processes of the mind: “Psychology as the behaviorist views it… [has as its] theoretical goal…the prediction and control of behavior. Introspection forms no essential part of its methods, nor is the scientific value of its data dependent upon the readiness with which they lend themselves to interpretation in terms of consciousness”.

Classical and operant conditioning

In an engineering sense, Watson’s behaviourism perhaps treats animals and humans as black boxes* (Sparks, 1982), whose truth tables can be elicited by comparing inputs (stimuli) and outputs (responses), without any attempt to model the internal logic of the system–an approach which Chomsky (1971) criticises. As Koestler (1967, p.19) put it–also heavily criticising the behaviourist view–“[s]ince all mental events are private events which cannot be observed by others, and which can only be made public through statements based on introspection, they had to be excluded from the domain of science.” However, learning (via conditioning) is inherent to behaviourism–both Watson’s and the later perspective of Skinner–which means that the black box is somewhat more complex than a component with fixed behaviour. Classical or respondent conditioning, of the kind explored with dogs by Pavlov (1927)–and often applied in behaviour change methods such as aversion therapy (as in, for example, the ‘Ludovico technique’ in Burgess’s novel A Clockwork Orange (1962))–repeatedly pairs two stimuli so that the reflex behaviour provoked by one also comes to be provoked by the other.
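The black-box view can be made concrete with a small sketch. The Python below is purely illustrative and not taken from any of the works cited: `ConditionableBox`, its `pairings_needed` threshold and `elicit_truth_table` are hypothetical names, and the idea that conditioning switches on after a fixed number of pairings is a deliberate oversimplification. It shows an observer tabulating stimulus-response pairs without modelling the internals, and how repeatedly pairing two stimuli changes that table.

```python
from itertools import product


class ConditionableBox:
    """Hypothetical black box: an observer sees only stimuli in, response out."""

    def __init__(self, pairings_needed: int = 3):
        # Assumption: conditioning 'switches on' after a fixed number of pairings.
        self.pairings_needed = pairings_needed
        self.pairings_seen = 0

    def respond(self, food: bool, bell: bool) -> bool:
        conditioned = self.pairings_seen >= self.pairings_needed
        # Food always provokes the reflex; the bell does so only once conditioned.
        response = food or (bell and conditioned)
        if food and bell:
            self.pairings_seen += 1  # the two stimuli were presented together
        return response


def elicit_truth_table(box: ConditionableBox) -> dict:
    """Tabulate the response to every stimulus combination, ignoring internals."""
    return {
        (food, bell): box.respond(food, bell)
        for food, bell in product([False, True], repeat=2)
    }


box = ConditionableBox()
before = elicit_truth_table(box)  # note: probing itself includes one pairing
for _ in range(3):
    box.respond(food=True, bell=True)  # repeatedly pair the bell with food
after = elicit_truth_table(box)

print(before[(False, True)])  # bell alone, before pairing: False
print(after[(False, True)])   # bell alone, after pairing: True
```

The two tables differ only in the bell-alone row, which is the sense in which a learning organism is not a component with fixed behaviour: even the act of probing it can change it.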

Operant conditioning, as developed by B.F. Skinner (1953) via famous experiments with pigeons, rats and other animals, is essentially about consequences: it involves reinforcing (or punishing) certain behaviours (the operant) so that the animal (or person) becomes conditioned to behave in a particular way:

“When a bit of behaviour is followed by a certain kind of consequence, it is more likely to occur again, and a consequence having this effect is called a reinforcer. Food, for example, is a reinforcer to a hungry organism; anything the organism does that is followed by the receipt of food is more likely to be done again whenever the organism is hungry. Some stimuli are called negative reinforcers: any response which reduces the intensity of such a stimulus–or ends it–is more likely to be emitted when the stimulus recurs. Thus, if a person escapes from a hot sun when he moves under cover, he is more likely to move under cover when the sun is again hot.” (Skinner, 1971, p.31-32)

It is important to note here that in Skinner’s terms, positive and negative reinforcement do not imply ‘good’ and ‘bad’, and negative reinforcement is a different concept to punishment. Positive reinforcement is giving a reward in return for particular behaviour; negative reinforcement is removing something unpleasant in return for particular behaviour. These are subtly different. Pryor (2002) gives the example of a car seatbelt warning buzzer as negative reinforcement–a device designed to be irritating or unpleasant enough to cause the user to take action to avoid it. We might consider that a recorded voice saying “Thank you” after the seatbelt is fastened could be a positive reinforcement alternative. Positive and negative punishment are essentially the inverse of each of these–a fine for not wearing a seatbelt while driving is a form of positive punishment, and taking away someone’s driving licence would be a form of negative punishment. Clicker training with animals such as dolphins and dogs (e.g. Pryor, 2002) arguably combines features of classical and operant conditioning, using an audible clicking device to help ‘mark’ particular behaviours immediately they occur, which can then be positively reinforced with treats–or the click itself can act as a reinforcer.
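The four categories can be read as a two-by-two grid: whether something is added or removed, and whether the behaviour becomes more or less likely. As a rough sketch of that grid (the `classify` function and its two boolean parameters are an illustrative encoding assumed here, not Skinner’s or Pryor’s notation), using the seatbelt and licence examples from the text:

```python
from enum import Enum


class Consequence(Enum):
    POSITIVE_REINFORCEMENT = "positive reinforcement"
    NEGATIVE_REINFORCEMENT = "negative reinforcement"
    POSITIVE_PUNISHMENT = "positive punishment"
    NEGATIVE_PUNISHMENT = "negative punishment"


def classify(stimulus_added: bool, behaviour_more_likely: bool) -> Consequence:
    """'Positive'/'negative' = added/removed; it does not mean good/bad."""
    if behaviour_more_likely:
        return (Consequence.POSITIVE_REINFORCEMENT if stimulus_added
                else Consequence.NEGATIVE_REINFORCEMENT)
    return (Consequence.POSITIVE_PUNISHMENT if stimulus_added
            else Consequence.NEGATIVE_PUNISHMENT)


# Seatbelt buzzer stops once the belt is fastened: something unpleasant is removed.
buzzer_stops = classify(stimulus_added=False, behaviour_more_likely=True)
# A recorded "Thank you" after fastening: something pleasant is added.
thank_you_voice = classify(stimulus_added=True, behaviour_more_likely=True)
# Driving licence taken away: something valued is removed.
licence_removed = classify(stimulus_added=False, behaviour_more_likely=False)

print(buzzer_stops.value)     # negative reinforcement
print(thank_you_voice.value)  # positive reinforcement
print(licence_removed.value)  # negative punishment
```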

A major factor in operant conditioning is the schedule of reinforcement that occurs: variable schedules of reinforcement, where a reward occurs on an unpredictable schedule–either ratio (amount of behaviour required) or interval (time required)–can be particularly effective; as Skinner (1971, p. 39) notes, variable ratio scheduling is “at the heart of all gambling systems”. Pryor (2002, p. 22) comments that “[p]eople like to play slot machines precisely because there’s no predicting whether nothing will come out, or a little money, or a lot of money, or which time the reinforcer will come (it might be the very first time).” This principle is inherent in all games of chance–Schell (2008, p.153) recognises it as something a designer can work with explicitly: “a good game designer must become the master of chance and probability, sculpting it to his will, to create an experience that is always full of challenging decisions and interesting surprises.”
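To illustrate the difference between a predictable and an unpredictable ratio schedule, here is a minimal simulation sketch. All names (`fixed_ratio`, `variable_ratio`, `gaps_between_rewards`) are hypothetical, and rewarding each response with a constant probability is just one simple way, assumed here, of producing a ratio that averages out to a given value while remaining unpredictable.

```python
import random
from typing import Callable, List


def fixed_ratio(n: int) -> Callable[[], bool]:
    """Reward exactly every n-th response."""
    count = 0

    def respond() -> bool:
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False

    return respond


def variable_ratio(mean_n: int, rng: random.Random) -> Callable[[], bool]:
    """Reward each response with probability 1/mean_n (assumed scheme)."""

    def respond() -> bool:
        return rng.random() < 1.0 / mean_n

    return respond


def gaps_between_rewards(schedule: Callable[[], bool], responses: int) -> List[int]:
    """Count how many responses were needed to obtain each successive reward."""
    gaps, since_last = [], 0
    for _ in range(responses):
        since_last += 1
        if schedule():
            gaps.append(since_last)
            since_last = 0
    return gaps


fixed_gaps = gaps_between_rewards(fixed_ratio(5), 1000)
variable_gaps = gaps_between_rewards(variable_ratio(5, random.Random(0)), 1000)

print(set(fixed_gaps))             # {5}: the reward is entirely predictable
print(sorted(set(variable_gaps)))  # many different gap lengths
```

On the fixed schedule the learner can know exactly when the next reward is due; on the variable one, as in Pryor’s slot machine example, the reinforcer might come on the very first response or only after a long run.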

*A ‘black box’ approach to modelling human, animal and other system behaviour has also been discussed extensively within cybernetics, e.g. by Ashby (1956) and Bateson (1969).

Social traps

“Like their physical analogs, social traps are baited. The baits are the positive rewards which, through the mechanisms of learning, direct behavior along lines that seem right every step of the way but nevertheless end up at the wrong place. Complex patterns of reinforcement, motivation, and the structure of social situations can draw people into unpreferred modes of behavior, subjecting them to consequences that are not comprehended until it is too late to avoid them.”

Cross and Guyer, Social Traps, 1980, p.16-17

Platt (1973) and Cross and Guyer (1980) discuss ‘social traps’: situations in which there is reinforcement which encourages a behaviour, but also a punishment or unpleasant consequence of some kind, affecting either the person involved or someone else, at some later point or in some other way. “The behavior that receives the green light becomes supplanted by or is accompanied by an unavoidable punishment…[C]igarette smoking provides a simple example: the gratification associated with smoking encourages future behavior of the same kind, while the painful illness associated with that same behavior does not occur until a point very distant in the future; and when, finally, the illness does occur, no behavioral adjustments exist that are sufficient to avoid it” (p.11-12). There are perhaps parallels with Bateson’s concept of the double bind (Bateson et al, 1956), in which a person is subject to conflicting ‘injunctions’ (reinforcers or punishments) about what ‘right’ behaviour is, with the result that whatever he or she does will be wrong (and perhaps punished) according to one of the injunctions.

Countertraps–what Platt (1973) suggests might be called ‘social fences’–also exist, where people avoid a behaviour because of (fear of) punishment or undesirable consequences, even though the behaviour would have been desirable. In social traps, the reinforcer is often a short-term, local gain, whereas the punishment is a longer-term effect, perhaps affecting a wider group or area: Platt cites Hardin’s tragedy of the commons (1968) as a well-known example of a social trap with worldwide social and environmental consequences. Costanza (1987) examines how different kinds of social traps are responsible for a range of environmental problems.

Cross and Guyer’s (1980) taxonomy of social traps is potentially interesting for two reasons from a design perspective (in common with some of the cognitive biases and heuristics to be discussed in a later post): design could seek to help users avoid such traps, by redesigning situations to remove them (hence influencing behaviour), or could in some way exploit the effects to influence behaviour, if they are useful in some other way.

In Cross and Guyer’s taxonomy, there are five classes of trap (including countertraps), together with a ‘hybrid’ category for traps comprising more than one of the others: time-delay traps, where the time lag between a behaviour and a reinforcer is too long for it to be effective, e.g. “the high school dropout who, avoiding the present pain and unpleasantness of school, finds himself later lacking the education which could have prepared him for a more rewarding job” (p.21); ignorance traps, in which people fail to make use of generally available knowledge when making a decision, but simply rely on immediate reinforcers or superstitions; sliding reinforcer traps, “patterns of behavior [which] continue long after the circumstances under which that behavior was appropriate have ceased to be relevant, producing negative consequences that would have been avoided easily had the behavior stopped earlier… The trap occurs because the rewards establish a habit which persists in the succeeding period” (p.25); externality traps, where “the reinforcements that are relevant to the first individual may not coincide with the returns received by the second… If Peter spends five minutes in a cafeteria line choosing his dessert, he does not suffer for it, but all the people waiting behind him certainly do” (p. 28); and collective traps, which are tragedy-of-the-commons-type externality traps, involving reinforcers or consequences for multiple participants based on behaviour by one or more.

Cross and Guyer (1980, p.35) suggest ‘ways out’ of the traps, including their ‘conversion’ into trade-offs, “presenting the individual with a set of reinforcers that occur in close proximity to the behavior in question and which closely match the actual reward and punishment patterns that underly [sic.] the situation. The trap then becomes a simple choice situation in which rational and learned behavior are coincident. In some cases–particularly those of time-delay traps–this might be accomplished simply by altering the timing of reinforcers somehow bringing the punishment or proxy for the punishment into closer proximity with its causative behavior.” This could well be the principle behind a design approach to removing social traps, although it relies on being able to determine the structure of reinforcers and punishments which are affecting current behaviour, and somehow redesigning them accordingly.

Means and ends

Studer (1970, p.114-6) discussed applying operant conditioning principles to the design of environments (such as buildings), by treating them as “learning systems arranged to bring about and maintain specified behavioral topographies…What operant findings suggest, among other things, is that events which have traditionally been regarded as the ends in the design process, e.g., pleasant, exciting, comfortable, the participant’s likes and dislikes, should be reclassified. They are not ends at all, but valuable means, which should be skillfully ordered to direct a more appropriate over-all behavioral texture.”

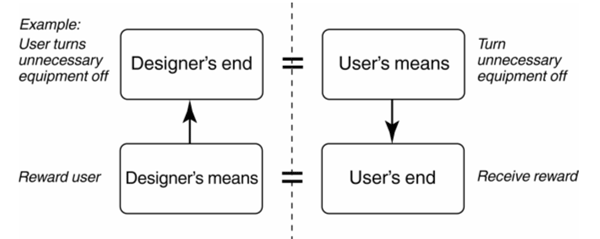

Reconsidering means and ends in this way may provide a useful alternative perspective on design for behaviour change. What may be an end from the user’s perspective (some kind of reward for turning off unnecessary equipment, perhaps) effectively becomes the means by which the designer’s end (the user turns off unnecessary equipment) might be influenced. The designer’s intended end is the user’s means for achieving the user’s intended end (Figure 1). If the end the user desires can be aligned with the means available to the designer, then the behaviour is reinforced. The mapping between ends and means (in both directions) may not seem to be one-to-one on first inspection. For example, the user’s end probably reflects an underlying need–not examined further in a behaviourist context–and likewise with the designer’s end. ‘Receiving feedback on my energy use in the office’–a favourite designer’s means for influencing reduced energy use–is probably rarely expressed as a desired end from a user’s point of view, but if successful at reinforcing conservation behaviour, it presumably fulfils some underlying psychological needs.

Figure 1. The designer’s end and user’s means may be seen as reflections of each other, and likewise with the designer’s means and user’s end. Based on ideas from Studer (1970).

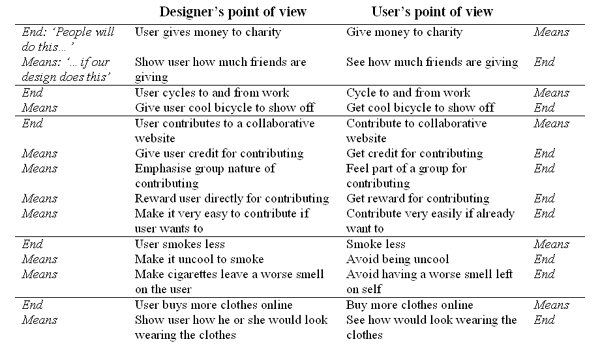

As an informal warm-up exercise in a workshop run at the Persuasive 2010 conference in Copenhagen, the author asked participants (designers and others involved with planning persuasive technology interventions) to map some intended ends relating to socially beneficial behaviour change, and some of the means they could think of to achieve them (Figure 2), using the labels ‘People will do this…’ and ‘…if our design does this’ for ends and means respectively.

Viewing the designer’s means from the user’s point of view, as an end, sometimes involves the end being avoiding something rather than receiving something–i.e. negative reinforcement. It is debatable whether this has much value beyond being simply a warm-up exercise, but it does encourage designers to think about trying to align the ends desired by the user with the means available to the designer. Weinschenk (2011, p.120), in appealing to (mainly web) designers to consider operant conditioning as a strategy for influencing behaviour, asks, “Hungry rats want food pellets. What does your particular audience really want?”

Figure 2. Some means-end pairings suggested by workshop participants in Copenhagen.

Impact of behaviourism

Despite many of behaviourism’s principles having been adopted in other fields–not just animal training but therapeutic applications (e.g. with autism), athletic training, programmed learning via ‘teaching machines’ (e.g. Kay et al, 1968) and the emerging self-help industry (Rutherford, 2009)–it was largely supplanted in the mainstream of academic psychology by the ‘cognitive revolution’ (e.g. Crowther-Heyck, 2005), re-emphasising cognition as something to be understood as a determinant of behaviour. Pask (1969, p.21) refers to “the arid conflict between behaviourism and mentalism,” while Ericsson and Simon (1985, p.1) suggest that “[a]fter a long period of time during which stimulus-response relations were at the focus of attention, research in psychology is now seeking to understand in detail the mechanisms and internal structure of cognitive processes that produce these relations.” Images of Skinner-like scientist figures peering at rats pressing levers to obtain food, with the implication that this was what was proposed for humanity, to some extent cast a shadow of ‘the psychologist as manipulator’ over subsequent work on behaviour change–as Pryor (2002, p. xiii) notes, “to people schooled in the humanistic tradition, the manipulation of human behavior by some sort of conscious technique seems incorrigibly wicked.” Winter and Koger (2004, p.116) suggest that “[s]inister motives are attributed to those who would implement behavioral technology, and Skinner himself has been badly misrepresented and misunderstood as a cold, cruel scientist”.

Skinner’s Beyond Freedom and Dignity (1971), which proposed a new society–“the design of a culture” based on a scientifically refined “technology of behaviour” reinforcing only behaviours which were beneficial to humanity, many of which were essentially about ensuring environmental sustainability–was widely read as promoting a totalitarian future. Chomsky (1971) suggested that “there is nothing in Skinner’s approach that is incompatible with a police state in which rigid laws are enforced by people who are themselves subject to them and the threat of dire punishment hangs over all,” and this view persists, although Skinner eschews the use of punishment in favour of reinforcement. Slater (2004, p. 28) argues that “Skinner is asking society to fashion cues that are likely to draw on our best selves, as opposed to cues that clearly confound us, cues such as those that exist in prisons, in places of poverty. In other words, stop punishing. Stop humiliating. Who could argue with that?”

In a later work, Skinner (1986) offers an explicit ‘design for sustainable behaviour’ view of the possibilities of intelligent use of operant conditioning:

“[W]e have the science needed to design a world…in which people treated each other well, not because of sanctions imposed by governments or religions but because of immediate, face-to-face consequences. It would be a world in which people produced the goods they needed, not because of contingencies arranged by a business or industry but simply because they were “goods” and hence directly reinforcing. It would be a beautiful and interesting world because making it so would be reinforced by beautiful and interesting things… It would be a world in which the social and commercial practices that promote unnecessary consumption and pollution had been abolished… A designed way of life would be liked by those who lived it (or the design would be faulty).” (Skinner, 1986, p. 11-12)

Rutherford (2009, p.102) notes that Skinner himself designed and “constructed a variety of gadgets and devices that allowed him to control his environment, and thus his behavior. For example for many years Skinner rose early to write, often going directly from his bed to his desk. He would then switch on his desk lamp, which was connected to a timer. When his writing time was up, the timer would switch off his desk lamp, signaling the end of the writing period… For Skinner, setting up environmental contingencies for personal self-management was a natural outcome of behavior analysis.”

Regardless of the position of behaviourism in current academic psychological discourse, there are certainly elements which are relevant to design for behaviour change; indeed, the principles of reinforcement can be seen at work underneath many designed interventions even if they are not explicitly recognised as such. As Skinner (1971) argued (see quote opening this section), the environment shapes our behaviour both before and after we take actions, as antecedent and as consequence (even the absence of a perceived consequence is a consequence, in this sense). This is an important point, since much work in behaviour change focuses on one or the other. A system designed to suggest or cue particular behaviours, and then reward or acknowledge them, covers both intervention points, particularly given the fact that much interaction with products and systems is part of a regular schedule, and users do learn how to operate things through an ongoing cycle of reinforcement: behaviour change does not necessarily happen in a single step. The concept of variable or unpredictable reinforcement has potential design application in situations where a reward cannot be given every time, and also (as noted by Schell (2008)) in the design of games and game-like features in other interactions. The idea of shaping behaviour towards an intended state, by progressively rewarding improvements in behaviour rather than rewarding the behaviour every time it occurs, has relevance in changing habits, which can be important in (for example) establishing exercise and healthier eating routines.

Winter and Koger (2004, p.118) propose what a behaviourist approach to a sustainable society might involve in relation to influencing more environmentally friendly transport choices, which suggests a mixture of different kinds of reinforcement designed into the system: “All the cues encouraging driving alone would be gone. Nobody would be climbing into a car alone, cars would be expensive to operate and roads would be less convenient. People would live within walking or biking distance to their workplace, commute in groups, or use public transportation… Schools and shops would be arranged close by, allowing people to complete errands without the use of a car… We wouldn’t try to change out of moral responsibility or pro-environment attitudes. We would emit environmentally appropriate behaviors because the environment had been designed to support them.”

Implications for designers

▶ Behaviourism is no longer mainstream psychology, but some of the principles could have potential application in design for behaviour change

▶ There is a recognition that the environment shapes our behaviour both before and after we take actions–a useful insight for designing interventions

▶ There is also a recognition that behaviour change does not necessarily happen in a single step, but as part of an ongoing cycle of shaping

▶ Where cognition cannot be understood or examined, modelling users in terms of stimuli and responses may still offer valuable insights

▶ Positive and negative reinforcement, and positive and negative punishment can all be implemented via designed features, and often underlie designed interventions without being explicitly named as such

▶ Schedules of reinforcement can be varied (e.g. made unpredictable) to drive continued behaviour

▶ Design could either exploit or help people avoid ‘social traps’ where both reinforcement and punishment exist, or reinforcement is currently misaligned with the behaviour, converting them into ‘trade-offs’ which more closely match the intended behavioural choices

▶ Considering means and ends may provide a useful perspective on design for behaviour change. The end from the user’s perspective effectively becomes the means by which the designer’s end might be influenced

References

Ashby, W.R. (1956) An Introduction to Cybernetics. Chapman & Hall, London

Bateson, G., Jackson, D.D., Haley, J. and Weakland, J.H. (1956) Toward a Theory of Schizophrenia. Behavioral Science I(4)

Bateson, G. (1969) Metalogue: What Is an Instinct? In Bateson, G. (1969) Steps to an Ecology of Mind. University of Chicago Press, Chicago

Burgess, A. (1962) A Clockwork Orange. Heinemann, London

Chomsky, N. (1971) The Case Against B.F. Skinner. The New York Review of Books, 30 Dec 1971

Costanza, R. (1987) Social traps and environmental policy. Bioscience 37(6)

Cross, J.G. and Guyer, M.J. (1980) Social Traps. University of Michigan Press, Ann Arbor

Crowther-Heyck, H. (2005) Herbert A. Simon: The Bounds of Reason in Modern America. Johns Hopkins University Press

Ericsson, K.A. and Simon, H.A. (1985) Protocol Analysis: Verbal Reports as Data. MIT Press

Hardin, G. (1968) The Tragedy of the Commons. Science 162

Kay, H., Dodd, B. and Sime, M.E. (1968) Teaching Machines and Programmed Instruction. Penguin

Koestler, A. (1967) The Ghost in the Machine.

Pask, G. (1969) The meaning of cybernetics in the behavioural sciences (The cybernetics of behaviour and cognition; extending the meaning of “goal”). In Rose, J. (ed.) (1969) Progress of Cybernetics, Volume 1. Gordon and Breach

Pavlov, I. (1927) Conditioned Reflexes: An Investigation of the Physiological Activity of the Cerebral Cortex. Translated by Anrep, G.V. Oxford University Press

Platt, J. (1973) Social Traps. American Psychologist, 28

Pryor, K. (2002) Don’t Shoot the Dog: The New Art of Teaching and Training. Interpet

Rutherford, A. (2009) Beyond the Box: B.F. Skinner’s Technology of Behavior from Laboratory to Life, 1950s-1970s. University of Toronto Press

Schell, J. (2008) The Art of Game Design. Morgan Kaufmann

Skinner, B.F. (1953) Science and Human Behavior. The Free Press, New York

Skinner, B.F. (1971) Beyond Freedom and Dignity.

Skinner, B.F. (1986) Why we are not acting to save the world. In Skinner, B.F. Upon further reflection. Prentice-Hall

Slater, L. (2004) Opening Skinner’s Box: Great Psychology Experiments of the Twentieth Century. Bloomsbury

Sparks, J. (1982) The Discovery of Animal Behaviour. Collins

Studer, R.G. (1970) The Organization of Spatial Stimuli. In Pastalan, L.A. and Carson, D.H. (eds.), Spatial Behavior of Older People. University of Michigan Press, Ann Arbor

Watson, J.B. (1913) Psychology as the behaviorist views it. Psychological Review, 20

Weinschenk, S. (2011) 100 Things Every Designer Needs to Know About People. New Riders

Winter, D. du N. and Koger, S.M. (2004) The Psychology of Environmental Problems. Lawrence Erlbaum Associates

B.F. Skinner photo from http://www.pbs.org/wgbh/aso/databank/entries/bhskin.html

Banksy Rat photo from DG Jones on Flickr, licensed under CC-BY-NC
