In the earlier days of this blog, many of the posts were about code in the Lawrence Lessig sense: the idea that the structure of software and the internet, and the rules designed into these systems, don’t just parallel the law in influencing and restricting public behaviour, but are qualitatively different, enabling distinct forms of affordance and constraint. In this sense, designers and developers (or in many cases those overseeing the process) potentially wield a lot of political power.

My aim initially, arising from my Master’s dissertation, was to chronicle and investigate something like this, but expanded to include ‘architectures of control’ in physical architecture and products as well as digital ones. The blog was a wonderful way to continue this research informally; readers’ comments convinced me that this was an interesting, under-explored subject.

While many examples were socially ‘negative’ — e.g. anti-homeless benches — it seemed that similar techniques could be applied in more socially beneficial ways. B.J. Fogg’s Persuasive Technology offered a template for designing systems to help people behave in ways they wanted (exercising more, eating more healthily, and so on), and this more optimistic approach suggested that maybe I could bring together techniques into a form of use to other designers who wanted to help people, society, and the environment. That led to the PhD, and the Design with Intent toolkit, and gradually the focus drifted away from the ‘code’ angle.

A couple of weeks ago, I was privileged to be part of Submit: Code as Control in Online Spaces [PDF], a workshop at the Good School in Hamburg, organised by Sebastian Deterding (a leading voice on intelligent approaches to ‘gamification’), Jan-Hinrik Schmidt, Stephan Dreyer, Nele Heise, and Katharina Johnsen from the Hans Bredow Institute for Media Research. Eighteen participants, deliberately chosen (curated?) to represent disciplines such as interaction design, games, economics, information science, human geography, media studies and law, spent two days “locked in a room” (as Jen Whitson put it), well-oiled with Club-Mate and Fritz-Kola, exploring questions around code through a series of collaborative exercises. Nele has Storified tweets and photos from the two days, while Jen Whitson has a great blog post going into more detail.

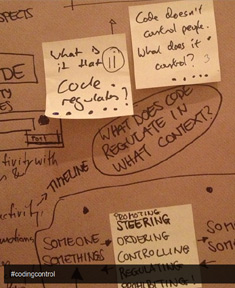

In small groups and all together, we looked at questions* including ‘code literacy’ (who needs to know how code works? what should they know? how should it be taught?), the boundaries of what exactly code can be considered to regulate, intentionality (does it matter? – something I’ve sort of looked at before), historically relevant perspectives (the Jacquard loom as the thin end of a long wedge), ‘war stories’ of code’s unexpected effects, and the rise of self-regulation via code as a kind of counterpart to Quantified Self approaches and commitment devices. Via a “dinner of ridiculously bold claims”, we considered the extent of privacy, and personal resistance to control, among other issues.

Left photo by Nicolas Nova

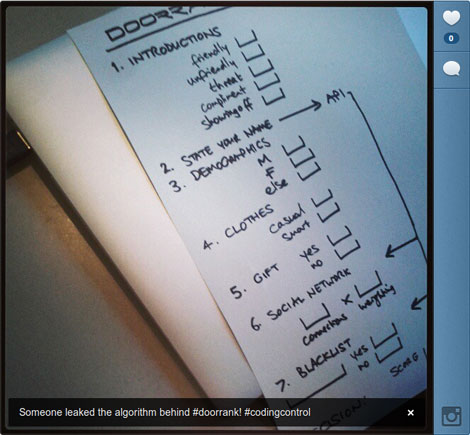

With Theo Röhle, R. Stuart Geiger and Malte Ziewitz, I helped devise Doorrank, a kind of “role-playing algorithm game”, centred on the idea of a programmable robot nightclub doorman (player 1), an API which could get information from elsewhere (player 2), some kind of designer / developer / censor / nightclub boss / security authority (player 3) making decisions about what rules to code into the doorman to decide who is let in, and who isn’t, and guests trying to get into the club (other players).

It wasn’t fully resolved in the time we spent on it, but the idea was that by “imagining you’re a software object” (as Nicolas Nova put it), something along these lines could be used as an exercise to help highlight the power, and social consequences, of apparently arbitrary (and often hidden) algorithms in everyday life, and to show how quickly the idea of tricking the doorman (hacking the system) arises. Jen pointed out the game’s parallels with Memento Mori’s Parsely Games; the initial idea I had was something similar to the explicitly behaviour change-focused ‘Rules of interaction’ exercise I’ve used in a couple of workshops.
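To make the roles a little more concrete, here is a rough Python sketch of how they might map onto code. None of this comes from the workshop itself: the names `Guest`, `Doorman`, `toy_lookup`, and the two rules are invented purely for illustration, and the real game was played by people, not programs. The doorman applies whatever rules the "boss" has coded in, using whatever the "API" reports about a guest.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Guest:
    """A guest at the door. Everything here is self-reported (hypothetical)."""
    name: str
    claimed_age: int


# The "API" (player 2): given a name, returns whatever is known elsewhere.
Lookup = Callable[[str], Dict[str, object]]

# A rule (chosen by player 3): True means the guest passes this check.
Rule = Callable[[Guest, Dict[str, object]], bool]


@dataclass
class Doorman:
    """The robot doorman (player 1): it only follows the rules it is given."""
    lookup: Lookup
    rules: List[Rule] = field(default_factory=list)

    def admits(self, guest: Guest) -> bool:
        record = self.lookup(guest.name)
        # A guest gets in only if every coded rule is satisfied.
        return all(rule(guest, record) for rule in self.rules)


def toy_lookup(name: str) -> Dict[str, object]:
    """Stand-in for an external data source; the records are made up."""
    records = {
        "Alex": {"on_guest_list": True},
        "Sam": {"on_guest_list": False},
    }
    return records.get(name, {})


def over_18(guest: Guest, record: Dict[str, object]) -> bool:
    return guest.claimed_age >= 18


def on_guest_list(guest: Guest, record: Dict[str, object]) -> bool:
    return bool(record.get("on_guest_list", False))


doorman = Doorman(lookup=toy_lookup, rules=[over_18, on_guest_list])

if __name__ == "__main__":
    print(doorman.admits(Guest("Alex", 30)))  # on the list, old enough
    print(doorman.admits(Guest("Sam", 30)))   # not on the list
    # Sam simply gives Alex's name: the doorman cannot tell the difference.
    print(doorman.admits(Guest("Alex", 30)))
```

Even in a toy like this, the weak points are visible: the doorman trusts the name it is told and the age it is given, so the quickest "hack" is just to claim to be someone else.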

I went away with a list of new perspectives and angles to investigate, potentially in collaboration with some very clever people, bridging disciplines in increasingly diverse ways. Thanks again to all the organisers and participants for a very interesting few days.

*A vague thing which I suggested as something to explore — but with which I could barely even work out where to start — is the idea of representing code (or rules in general) in visual or tactile ways which would allow their impact to be seen or felt ‘directly’ (whatever that means). For example, a low doorway could be seen as a physical representation of a rule that allows shorter people through and restricts taller people, or Spear’s Spellmaster tiles as a physical representation of spelling rules. I am fascinated and inspired by Mark Changizi’s Escher Circuits and David Cox’s FLIPP Explainers, and the idea of physical “perceptual mechanisms” as a form of embodied cognition, along with some of the more visual forms that analogue computing has taken over the years. But I don’t know quite where this idea could lead, and what exactly it would be useful for.

P.S. Sebastian’s recent presentation on Policy Making as Game Design is also very relevant here.