Continued from part 3

This series is looking at which design techniques and mechanisms are applicable to guiding a user to follow a process or path, performing actions in a specified sequence. The techniques that seem to apply to this ‘target behaviour’ fall roughly into three ‘approaches’, which, if anything, describe the mindset a designer might have in approaching the ‘problem’. In many designed solutions more than one technique may well apply at once, but each reflects a particular way of looking at the problem. In this post, I’m going to examine what I’ve called the Persuasive Interface approach, which draws heavily on the work of BJ Fogg, though applied specifically to this particular target behaviour.

As noted before, a lot of this may seem obvious – and it is obvious: we encounter these kinds of design techniques in products and systems every day, but that’s part of the point of this bit of the research: understanding what’s out there already.

Persuasive Interface approach

The design of the interface (however loosely defined) of a product or system can be an important factor in encouraging users to follow a process or path in a specified sequence. Interfaces can use a number of psychological persuasion mechanisms outlined by BJ Fogg (a ‘human factors’ approach) in conjunction with the technical capabilities of the interface itself. As well as the interface capabilities themselves, then, some mechanisms applicable to this behaviour are Self-monitoring, Tunnelling, Suggestion (kairos) and Operant conditioning.

Interface capabilities

What I mean by this (there is probably a better term for it waiting to be coined) is the choice of degree, type and format of information or feedback that an interface can provide to a user. Clearly, an interface with few capabilities for actually providing the user with feedback, or worse, inappropriate feedback capabilities (e.g. a car speedometer that only tells you your mean speed for the journey, rather than your instantaneous speed), has a different (probably much worse) chance of affecting users’ behaviour. This is why having the electricity meter in a cupboard, and looking at it four times a year, is not very persuasive in energy-saving terms.

Careful selection, from a technical point of view, of what information, feedback and control capabilities are designed into a system can have a major effect on user behaviour. To some extent, adding an interface to a system which did not previously have one may drive behaviour change in itself. Technical decisions about the types of interaction possible between an interface and the underlying system or product, and between the user and the interface (the capabilities of the interface), determine how the user experience will work: if a system is not designed with a function for monitoring progress through a sequence of operations, for example, then indicating that progress via an interface will be impossible, or at least far more difficult. Providing the infrastructure for a meaningful and useful interface is a design decision which can shape or even determine the system’s use characteristics.
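To make the speedometer example concrete, here is a minimal Python sketch contrasting the two feedback capabilities. It assumes the interface receives timestamped distance readings; the `Sample` type and both function names are hypothetical, chosen only for illustration. A journey-average display barely registers a change in current driving, whereas the instantaneous reading reflects it immediately.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    t_hours: float      # time since the start of the journey, in hours
    distance_km: float  # distance travelled since the start, in km

def mean_speed(samples: list[Sample]) -> float:
    """Journey-average speed (km/h): total distance over total time."""
    first, last = samples[0], samples[-1]
    return (last.distance_km - first.distance_km) / (last.t_hours - first.t_hours)

def current_speed(samples: list[Sample]) -> float:
    """Approximate instantaneous speed (km/h) from the two latest samples."""
    prev, last = samples[-2], samples[-1]
    return (last.distance_km - prev.distance_km) / (last.t_hours - prev.t_hours)

# One hour at 50 km/h, then 15 minutes at 120 km/h.
journey = [Sample(0.0, 0.0), Sample(1.0, 50.0), Sample(1.25, 80.0)]
print(mean_speed(journey), current_speed(journey))  # prints: 64.0 120.0
```

Here a driver doing 120 km/h would see 64 km/h on a mean-speed display, which gives almost no useful cue about what to change right now.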

Self-monitoring

Self-monitoring, as defined by BJ Fogg, is an interface design mechanism which explicitly links feedback of information to the user’s actions: the user can monitor his or her behaviour and the effect that this has on the system’s state. As applied to helping a user follow a process or path in sequence, it makes sense for the self-monitoring to involve real-time feedback – so that the ‘correct’ next step can immediately be taken if the feedback indicates that this is what should happen – but in other contexts, ‘summary’ monitoring may also be useful, such as giving the user a report of his or her behaviour and its efficacy over a certain period.

Even giving a user the ability to self-monitor where previously there was none can help change behaviour: for example, providing a home electricity meter in an immediately visible position is likely to be more persuasive at inspiring energy saving – by increasing awareness of consumption – than having the meter hidden away.

Example: LinkedIn’s ‘Profile Completeness’ indicator allows users to monitor their ‘progress’, driving them to follow a specified sequence of actions.
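The mechanics of a completeness indicator like this can be sketched in a few lines of Python. This is an illustration under assumptions, not LinkedIn’s actual implementation: the section names, their weights and the fixed ordering are all hypothetical. The point is that the same data can drive both the self-monitoring feedback (a percentage) and a cue for the next step in the sequence.

```python
from typing import Optional

# Hypothetical profile sections and weights, listed in the order the
# interface wants users to complete them. NOT LinkedIn's actual scheme.
PROFILE_STEPS = [
    ("photo", 20),
    ("headline", 15),
    ("current_position", 25),
    ("education", 20),
    ("summary", 20),
]

def completeness(completed: set[str]) -> int:
    """Weighted percentage of the profile checklist that is done."""
    total = sum(weight for _, weight in PROFILE_STEPS)
    done = sum(weight for name, weight in PROFILE_STEPS if name in completed)
    return round(100 * done / total)

def next_step(completed: set[str]) -> Optional[str]:
    """First incomplete step in the specified order, or None if finished."""
    for name, _ in PROFILE_STEPS:
        if name not in completed:
            return name
    return None

done_so_far = {"photo", "headline"}
print(f"Profile {completeness(done_so_far)}% complete; next: {next_step(done_so_far)}")
# prints: Profile 35% complete; next: current_position
```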

Tunnelling

Tunnelling is a ‘guided persuasion’ mechanism outlined by Fogg, in which a user ‘agrees’ to be routed through a sequence of pre-specified actions or events:

When you enter a tunnel, you give up a certain level of self-determination. By entering the tunnel, you are exposed to information and activities you may not have seen or engaged in otherwise. Both of these provide opportunities for persuasion.

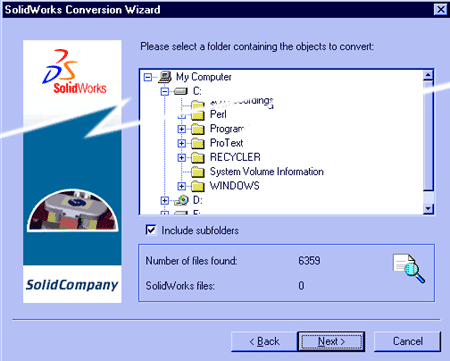

Applying this mechanism involves treating the user as a captive audience: presenting only the ‘correct’ sequence of actions, step by step, with any user choices being limited, and the commitment to following the process being a motivator to accept the advice or opinions presented. Fogg uses the example of people voluntarily hiring personal trainers to guide them through fitness programmes. Some software wizards provide an interface analogy, where the intention is not merely to simplify a process, but additionally to shape the user’s choices.

Example: This software wizard helps the user ‘tunnel’ through a file conversion process in the right order.
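A wizard of this kind behaves like a simple state machine. The Python sketch below, with hypothetical step names for a file conversion, assumes the tunnel is enforced by exposing only the current step and rejecting anything out of order; real wizards may instead simply hide or grey out the other controls.

```python
from typing import Optional

class ConversionWizard:
    """A 'tunnel': each step becomes available only after the previous one."""

    # Hypothetical steps for a file conversion, in the required order.
    STEPS = ["select_file", "choose_format", "set_options", "convert"]

    def __init__(self) -> None:
        self.position = 0                  # index of the step the user is on
        self.choices: dict[str, str] = {}  # what the user entered at each step

    @property
    def current_step(self) -> Optional[str]:
        """The only step the interface offers right now (None when finished)."""
        if self.position < len(self.STEPS):
            return self.STEPS[self.position]
        return None

    @property
    def finished(self) -> bool:
        return self.current_step is None

    def complete(self, step: str, value: str) -> None:
        """Record a step, refusing anything other than the current one."""
        if step != self.current_step:
            raise ValueError(
                f"{step!r} is not available; current step is {self.current_step!r}"
            )
        self.choices[step] = value
        self.position += 1


wizard = ConversionWizard()
wizard.complete("select_file", "report.doc")
try:
    wizard.complete("convert", "now")  # trying to skip ahead
except ValueError as err:
    print(err)  # prints: 'convert' is not available; current step is 'choose_format'
```

Limiting the user to one available action at a time is what makes this a tunnel rather than just a simplified menu.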

Suggestion (kairos)

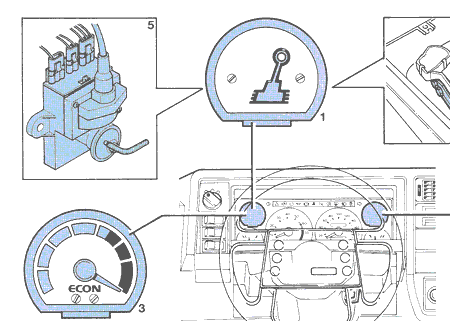

Suggestion (kairos) involves suggesting a behaviour to a user at the ‘opportune’ moment, i.e. when that behaviour would be the most efficient or otherwise most desirable step to take (either from the user’s point of view, or that of another entity). In the context of helping a user follow a process or path in a specified sequence, this is very easily implemented: the system can simply ‘cue’ the desired next step in the sequence by alerting or reminding the user, whether that comes through indicators on the interface itself, or some other kind of alert.

Suggestions can also help steer users away from incorrect behaviour next time they use the system, even if it’s too late this time; when presented at the point where a mistake or incorrect step is obvious, advice on what to do next time may be more easily recalled. The key to this mechanism is that the suggestion is timed or triggered at the right point in the sequence, so that its effect is most persuasive. This may imply a system which monitors the user’s behaviour and responds accordingly via the interface, or it might be realised by an interface designed so that, by helping the user keep track of where he or she is in a sequence of operations, the suggestions only appear or are visible at the right point.

Example: This Gearchange Indicator light, fitted to certain Volvo models, suggests the most efficient moment to change gear, based on measurement of engine RPM and throttle position. Thanks to Mac MacFarlane for the image.
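The decision behind such an indicator can be sketched as a rule that fires only when conditions make an upshift opportune. The two inputs (engine RPM and throttle position) come from the caption above, but the thresholds, the gear check and the exact combination are hypothetical, for illustration only; the manufacturer’s actual calibration is not described here. The throttle check reflects one plausible design choice: a driver accelerating hard (say, overtaking) probably should not be prompted to change up.

```python
from dataclasses import dataclass

@dataclass
class EngineState:
    rpm: float
    throttle: float  # throttle position: 0.0 (closed) to 1.0 (wide open)
    gear: int

# Hypothetical calibration values for illustration only.
UPSHIFT_RPM = 2500
MAX_THROTTLE_FOR_UPSHIFT = 0.6
TOP_GEAR = 5

def suggest_upshift(state: EngineState) -> bool:
    """Light the indicator only at the opportune moment (kairos)."""
    return (
        state.gear < TOP_GEAR
        and state.rpm >= UPSHIFT_RPM
        and state.throttle <= MAX_THROTTLE_FOR_UPSHIFT
    )

readings = [
    EngineState(rpm=1800, throttle=0.3, gear=3),  # too early to change up
    EngineState(rpm=2800, throttle=0.3, gear=3),  # cruising: suggest upshift
    EngineState(rpm=2800, throttle=0.9, gear=3),  # accelerating hard: stay quiet
    EngineState(rpm=3000, throttle=0.3, gear=5),  # already in top gear
]
print([suggest_upshift(r) for r in readings])  # prints: [False, True, False, False]
```

Timing is the whole point: the same advice shown constantly would be noise, whereas shown only when the conditions are met it arrives at the moment acting on it is most worthwhile.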

Operant conditioning

Controversial, certainly, but reinforcing target behaviours through rewards or punishment may be applicable where we want the user to perform a (perhaps complex) behavioural sequence repeatedly, so that it becomes habit, or so that successive attempts come progressively closer to the intended sequence. However, it is unlikely to be effective in encouraging users to follow one-off sequences, where actual direction (e.g. suggestion, tunnelling) is far more useful. In general, punishing users for mistakes is an undesirable design strategy.

In part 5, we’ll review the approaches we’ve looked at, and see how one might actually go about choosing among them to design a new product or system with this particular target behaviour.