(introducing behavioural heuristics)

EDIT (April 2013): An article based on the ideas in this post has now been published in the International Journal of Design – which is open-access, so it’s free to read/share. The article refines some of the ideas in this post, using elements from CarbonCulture as examples, and linking it all to concepts from human factors, cybernetics and other fields.

There are lots of models of human behaviour, and as the design of systems becomes increasingly focused on people, modelling behaviour has become more important for designers. As Jon Froehlich, Leah Findlater and James Landay note, “even if it is not explicitly recognised, designers [necessarily] approach a problem with some model of human behaviour”, and, of course, “all models are wrong, but some are useful”. One of the points of the DwI toolkit (post-rationalised) was to try to give designers a few different models of human behaviour relevant to different situations, via pattern-like examples.

I’m not going to get into what models are ‘best’ / right / most predictive for designers’ use here. There are people doing that more clearly than I can; also, there’s more to say than I have time to do at present. What I am going to talk about is an approach which has emerged out of some of the ethnographic work I’ve been doing for the Empower project, working on CarbonCulture with More Associates, where asking users questions about how and why they behaved in certain ways with technology (in particular around energy-using systems) led to answers which were resolvable into something like rules: I’m talking about behavioural heuristics.

Behavioural heuristics

The term has some currency in game theory, other economic decision-making and even in games design, but all I really mean here is rules (of thumb) that people might follow when interacting with a system – things like:

▶ If someone I respect read this article, I should read it too

▶ If this email claiming to be from my bank uses language which makes me suspicious, I should ignore it

▶ If I’ve read something that makes me look intelligent, I should tell others

▶ If that Go Compare advert comes on, I should press ‘mute’

▶ If the base of my coffee cup might be wet, I should put it on something rather than directly on the polished wooden table

▶ If, when asked which of two cities has a bigger population, I have only heard of one of them, I should choose that one

▶ If my friend posts that she has a new job, I should congratulate her

▶ If there’s a puddle in front of me, I should walk round it

▶ If there’s a puddle in front of me, I should jump in it

▶ If I’m short of time, I should choose the brand name I recognise

▶ If I have some rubbish, and there’s a recycling bin nearby, I should recycle it

▶ If I have some rubbish, and there isn’t a recycling bin nearby, I should put it in a normal bin

▶ If that bench is wet or dirty, I should sit somewhere else

▶ If lots of my friends are using this app, I should try it too

▶ If there are lots of pairs of seats empty on the train, I should sit in one of them rather than sitting next to someone already occupying one of a pair

▶ If I can’t see the USB logo on the top of this connector, I should turn it over before trying to plug it in

▶ If I can’t get the USB cable to plug in properly, I should force it

▶ If seats are positioned round a table, I should sit at the table

▶ If I’m trying to lose weight, I should try to choose food with less fat in it

▶ If this envelope has HM Revenue & Customs on the back, I should open it

▶ If this envelope is from BT and printed on shiny paper, I should shred it immediately without bothering to open it

▶ If this website asks me to fill in a survey, I should click cancel immediately

▶ That urinal spacing thing. You know what I mean.

These are a mixture of instinctive or automatic reactions (a kind of ifttt for people) and those with more deliberative processes behind them: the elephant and rider or Systems 1 and 2 or whatever you like. Some are more abstract than others; most involve some degree of prior learning, whether purely through conditioning or a conscious decision, but in practice can be applied quickly and without too much in-context deliberation (hence at least some are ‘fast and frugal’, in Dan Goldstein and Gerd Gigerenzer’s terms). Some heuristics could lead to cognitive biases (or vice versa); some involve following plans, some are more like situated actions. And of course not all of them are true for everyone, and they would differ in different situations even for the same people, depending on a whole range of factors.

Truth tables for people

Regardless of the backstory, though, each of these rules or heuristics potentially has effects in practice in terms of the actual behaviour that occurs. They are almost like atomic black boxes of action, transducers* which when connected together in specific configurations result in ‘behaviour’.

We might construct ‘behavioural personas’ which put together compatible (whatever that means) heuristics into persona-like fictional users, described in terms of the rules they follow when interacting with things, and both (admittedly crudely) simulate** their behaviour in a situation, and, maybe more importantly, design systems which take account of the heuristics that users are employing.

If we know that our fictive user is following a “If someone I respect read this article, I should read it too” heuristic, then designing a system to show users that people they respect (however that’s determined) read or recommended an article ought to be a fairly obvious way to influence the fictive user to read the article. If we know that he or she also follows related heuristics in other parts of life, e.g. the “If I’ve read something that makes me look intelligent, I should tell others” rule, then this action could also be incorporated into the process.
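As a very rough illustration of the 'behavioural persona' idea, a persona could be sketched as a list of if-then rules applied to a situation. Everything here (the `Heuristic` and `Persona` names, the situation keys) is hypothetical and invented for illustration. It is a crude sketch under those assumptions, not a validated model of behaviour.

```python
from dataclasses import dataclass, field
from typing import Callable

# A situation is just a dictionary of observations (hypothetical keys).
Situation = dict[str, bool]


@dataclass
class Heuristic:
    """One if-then rule: if `condition` holds in a situation, do `action`."""
    condition: Callable[[Situation], bool]
    action: str


@dataclass
class Persona:
    """A fictional user described only by the rules they follow."""
    name: str
    heuristics: list[Heuristic] = field(default_factory=list)

    def simulate(self, situation: Situation) -> list[str]:
        # Return the action of every rule whose condition is met.
        return [h.action for h in self.heuristics if h.condition(situation)]


# The two rules from the example above, as assumed situation flags.
reader = Persona(
    name="fictive user",
    heuristics=[
        Heuristic(lambda s: s.get("respected_person_read_it", False),
                  "read the article"),
        Heuristic(lambda s: s.get("makes_me_look_intelligent", False),
                  "tell others"),
    ],
)

actions = reader.simulate({"respected_person_read_it": True})
print(actions)
```

Rules whose conditions are not met simply do nothing here, which is one of the ways such a sketch is cruder than real behaviour.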

There are two main objections to this. One: it’s obvious, and we do it anyway; and two: treating people like electronic components is horrible / grotesquely reductive / etc. I don’t disagree with either, but am nevertheless interested in exploring the possibilities of using this kind of modelling, simple and lacking in nuance as it is, to provide a way of navigating and exploring the many different ways that design can influence behaviour. If we could do contextual user research with this kind of heuristic as a unit of analysis, uncovering how many users in our situation are likely to be following different heuristics, we could design systems which are not just segmented but tailored much more directly to the things which ‘matter’ to people in terms of how they behave.

Trying it out: thank you, Dublin guinea-pigs

At Interaction 12 last week in Dublin, 41 wonderful people from organisations including Adaptive Path, Google and Chalmers University took part in a workshop exploring the idea of these heuristics and how they might be used in design for behaviour change.

What we did first was a kind of rapid functional decomposition (in the Christopher Alexander sense) on a few examples where systems have been designed expressly to try to influence user behaviour in multiple ways.

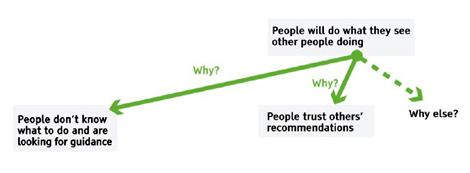

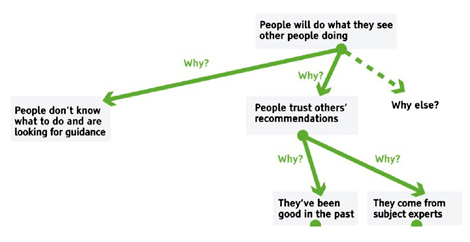

The example I worked through first though was a simple decomposition of Amazon’s ‘social proof’ recommendation system: the point was to try to think through some of the ‘assumptions’ about behaviour that can be read into the design, and using a kind of laddering / Five Whys process, end up with statements of possible heuristics.

So with the Amazon example here, what assumptions are present that, if true, would explain how the system ‘works’ at influencing users’ behaviour? What I have glibly classified as simply social proof contains a number of assumptions, including things like:

▶ People will do what they see other people doing

▶ People want to learn more about a subject

▶ People will buy multiple books at the same time

And many others, probably. But let’s look in more detail at ‘People will do what they see other people doing’: Why? Why will people do what they see other people doing? If we break this down, asking ‘Why?’ a couple of times, we get to tease out some slightly different possible factors.

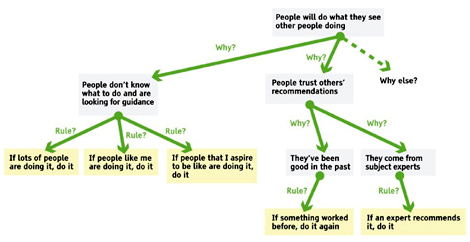

After a couple of iterations it’s possible to see some actual heuristics emerge:

Of course there are many possible heuristics here, but for the five uncovered, it’s not too difficult to think of design patterns or techniques which are directly relevant:

| Heuristic | Design pattern or technique |
| --- | --- |
| ▶ If lots of people are doing it, do it | Show directly how many (or what proportion of) people are choosing an option |
| ▶ If people like me are doing it, do it | Show the user that his or her peers, or people in a similar situation, make a particular choice |
| ▶ If people that I aspire to be like are doing it, do it | Show the user that aspirational figures are making a particular choice |
| ▶ If something worked before, do it again | Remind the user what worked last time |
| ▶ If an expert recommends it, do it | Show the user that expert figures are making a particular choice |
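The five pairings above could be held as a simple lookup, so that a set of heuristics uncovered for a user returns candidate techniques. This is only a sketch: the `PATTERNS` dictionary and the `suggest_patterns` function are hypothetical names, and the text is copied from the pairings above rather than from any existing toolkit.

```python
# Heuristic -> design technique, copied from the pairings above.
PATTERNS = {
    "If lots of people are doing it, do it":
        "Show directly how many (or what proportion of) people are choosing an option",
    "If people like me are doing it, do it":
        "Show the user that his or her peers, or people in a similar situation, "
        "make a particular choice",
    "If people that I aspire to be like are doing it, do it":
        "Show the user that aspirational figures are making a particular choice",
    "If something worked before, do it again":
        "Remind the user what worked last time",
    "If an expert recommends it, do it":
        "Show the user that expert figures are making a particular choice",
}


def suggest_patterns(heuristics):
    """Return techniques for the given heuristics, skipping any not in the lookup."""
    return [PATTERNS[h] for h in heuristics if h in PATTERNS]


suggestions = suggest_patterns([
    "If lots of people are doing it, do it",
    "If something worked before, do it again",
])
for s in suggestions:
    print(s)
```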

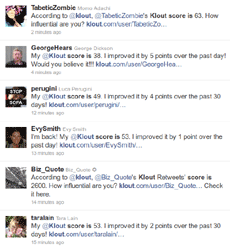

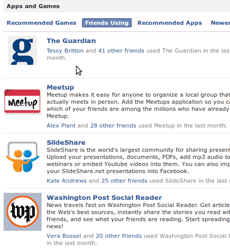

There’s nothing there that isn’t obvious, but I suppose my point is that each heuristic implies a specific design feature, and the process of unpicking what the actual decision-points might involve gives us a much more targeted set of design possibilities than simply saying ‘put some social proof there’. Depending on the heuristics uncovered, it might be that simple majority preference (the Whiskas ad), irritating pseudo-authority-based messaging (Klout), friend-based recommendation (Facebook apps), peer voting (Reddit) or even celebrity/expert endorsement (John Stalker and Drummer endorsing awnings) could match individual users’ heuristics better.

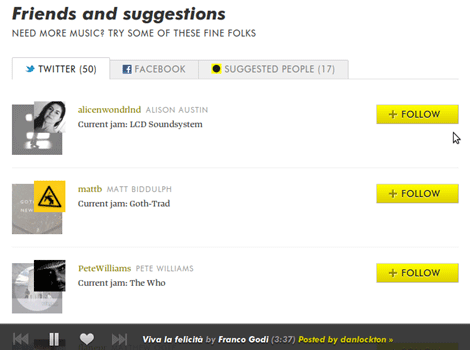

Sometimes a service will use more than one, to try to satisfy multiple heuristics, or perhaps because the designers are not sure which heuristics are really important to the user (e.g. the This Is My Jam example below). In some ways, this process is approaching the kind of ‘persuasion profiling’ being pioneered by Maurits Kaptein, Dean Eckles and Arjan Haring’s Persuasion API, although from a different direction.

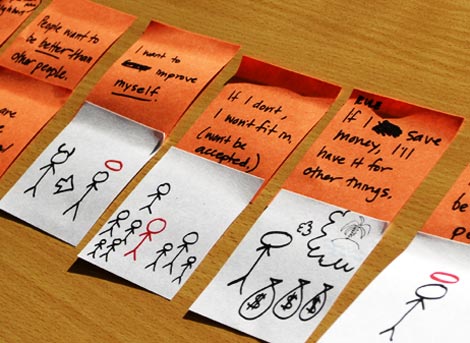

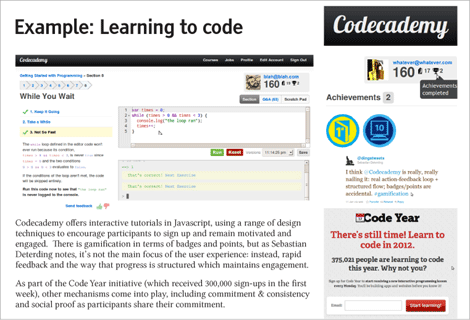

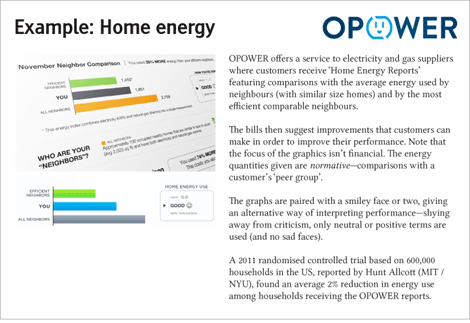

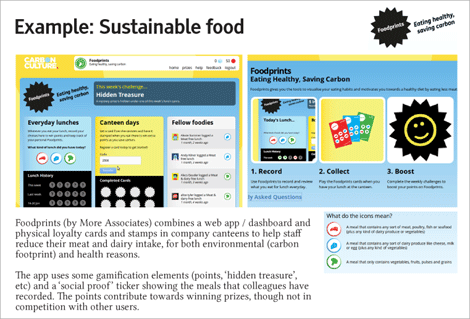

In the workshop, groups did a similar decomposition on three examples: Codecademy, Opower and Foodprints, part of More Associates’ CarbonCulture platform – the introductory material is reproduced below. [PDF of this material]

For each of these, groups extracted a handful of statements of possible heuristics – for example, for Opower, these included:

▶ If my neighbour can do it, I can do it

▶ If life’s a competition, I want to win it

▶ If I set myself goals, I want to meet them

▶ I don’t want to be the ‘weak link’, so I should do it

▶ I want to be ‘normal’, so I should do it

▶ [If I do it] I will be better than other people

▶ If I get appreciation from others, I will continue to do it

▶ If it stops me being the ‘bad guy’, I will do it

▶ If it stops me feeling guilty, I will do it

▶ [If I do it] I will improve myself

▶ If I don’t do it, I won’t fit in

▶ If I save money, I’ll have it for other things

▶ [If I do it] I will be a ‘good’ person

▶ [If I don’t do it] bad things will happen

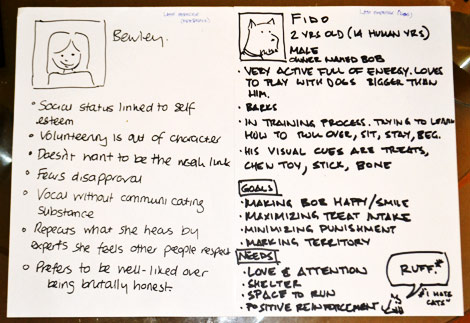

We went on to swap some of the heuristics among groups, and build them up into relatively plausible (if completely fake) personas, ranging from a “goth who doesn’t want to do what others do”, to Fido, a guide dog intent on helping his partially-sighted owner Bob (as SVA’s Lizzy Showman mentions here).

In turn, the groups then used the DwI cards as inspiration to generate some possible concepts in response to a brief about keeping that person (or dog) engaged and motivated as part of a behaviour change programme at work, around behaviours such as exercise, giving better feedback and so on. Finally, groups acted these out (photo below shows Fido and Bob!).

Where does all this fit into a design process?

What was the point of all this? The aim, really, is ultimately to provide a way of helping designers choose the most appropriate methods for influencing user behaviour in particular contexts, for particular people. This is what much design for behaviour change research is evolving towards, from Stanford’s Behaviour Wizard to Johannes Zachrisson’s development of a framework.

I would envisage that with user research framed and phrased in the right way (observation, interviews and actual behavioural data), it would be possible to extract heuristics in a form which is useful for selecting design patterns to apply. While in the workshop we ‘decomposed’ existing systems without doing any real user research, doing this alongside would enable the heuristics extracted to be compared and discrepancies investigated and resolved. The redesigned system could thus match much better the heuristics being followed by users, or, if necessary, help to shift those heuristics to more appropriate ones.
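The comparison step might look something like the following sketch, which contrasts heuristics read into a design by decomposition with those found through user research. The `compare_heuristics` function is a hypothetical name, and the `observed` set is made-up example data, not a research result.

```python
def compare_heuristics(assumed: set[str], observed: set[str]) -> dict[str, set[str]]:
    """Split heuristics into those confirmed and those found on only one side."""
    return {
        "confirmed": assumed & observed,       # the design's assumption matches users
        "assumed_only": assumed - observed,    # designed for, but not seen in research
        "observed_only": observed - assumed,   # seen in research, but not designed for
    }


# Heuristics read into an existing design by decomposition.
assumed = {
    "If lots of people are doing it, do it",
    "If an expert recommends it, do it",
}

# Hypothetical research findings, invented purely for illustration.
observed = {
    "If lots of people are doing it, do it",
    "If people like me are doing it, do it",
}

report = compare_heuristics(assumed, observed)
for category, items in report.items():
    print(category, sorted(items))
```

The two 'only' categories are the discrepancies: each is a prompt either to redesign the system or to look again at the research.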

Ultimately, each design pattern in some future version of the DwI toolkit will be matched to relevant heuristics, so that there’s at least a more reasoned (if not proven) process for doing design for behaviour change, using heuristics as a kind of common currency between user behaviour and design patterns: user research → extracting heuristics → matching heuristics to design patterns → redesigning system by applying patterns → testing → back to the start if needed

In the meantime, my next step with this is to do some more extraction of heuristics from actual behavioural data for some particular parts of CarbonCulture, and (as my job requires) put this process into a more formal write-up for an academic journal. I will try to make some properly theoretical bridges with the heuristics work of Gerd Gigerenzer, Dan Goldstein and (as always) Herbert Simon. But if you have any thoughts, suggestions, objections or otherwise, please do get in touch.

Thanks to everyone who came to the workshop, and thanks too to the Interaction 12 organisers for an impressively organised conference.

* In reality, the rules have to be able to degrade if the conditions are not met: people are maybe following nested IF…THEN…ELSE statements rather than individual IF…THEN rules. Or perhaps more likely (this thought occurred while talking to Sebastian Deterding on a bus from Dun Laoghaire last week) a kind of CASE statement – which would take us into pattern recognition and recognition-primed decision models…

**Matt Jones suggests I should read Manuel deLanda’s Philosophy and Simulation, which fills me with both excitement and fear…

Image sources: ‘If…’ movie poster; Whiskas ad; Nationwide awnings
