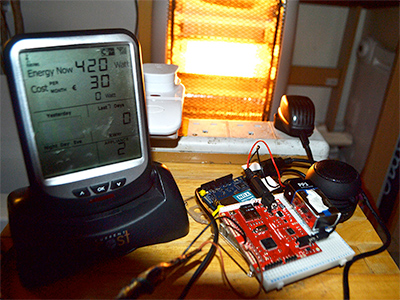

Powerchord 1, housed in a Poundland lunchbox. In the video, a laptop (~40W) is plugged in and, from 10 seconds, the Powerchord kicks in with relatively gentle blackbird song. (The initial very quiet birdsong at 4-8 seconds is actual blackbirds singing in the hedge outside!) Then at 30 seconds, a 400W electric heater is switched on, and the birdsong increases in emphasis accordingly. At 49 seconds, a second 400W element is switched on and the birdsong increases further in volume. There is some background noise of rain on the shed roof.

In the previous post, I introduced the exploration Flora Bowden and I have been doing of sonifying energy data, as part of the SusLab project. The ‘Sound of the Office’ represented twelve hours’ electricity use by three items of office infrastructure — the kettle, a laser printer, and a gang socket for a row of desks — turned into a 30-second MIDI file.

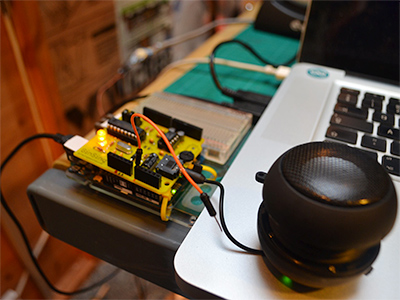

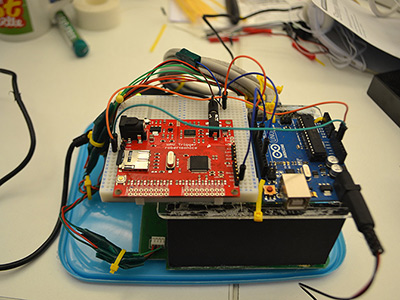

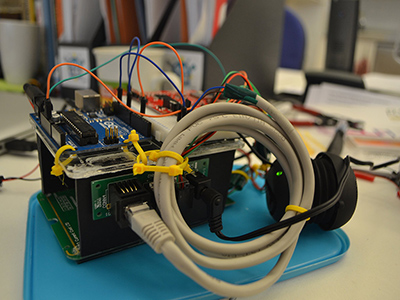

Going further with this idea, I’ve been playing with taking it into (near) real-time, producing sound directly in response to the electricity use of multiple appliances. Powerchord seemed too good a name to pass up. Again using CurrentCost IAMs, transmitting data to a CurrentCost EnviR, this system then uses an Arduino to parse the CurrentCost’s XML stream*, and trigger particular audio tracks via a Robertsonics WAV Trigger. I tried a GinSing to start with, which was a lot of fun, but the WAV Trigger offered a more immediate way of producing suitable sounds.
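To give a flavour of the parsing step, here is a minimal sketch in Python. It is not the Arduino code used in Powerchord. It assumes the EnviR emits one `<msg>…</msg>` element per line, with a `<sensor>` number and one or more `<chN><watts>` channels, as I understand the published CurrentCost XML format; the sample message and the `parse_reading` helper are made up for illustration.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical sample line, shaped like a CurrentCost real-time message (assumed format).
SAMPLE = (
    "<msg><src>CC128-v0.11</src><dsb>00089</dsb><time>13:02:39</time>"
    "<tmpr>18.7</tmpr><sensor>1</sensor><id>01234</id><type>1</type>"
    "<ch1><watts>00345</watts></ch1></msg>"
)


def parse_reading(line):
    """Return (sensor, total_watts) for a real-time message, else None."""
    try:
        msg = ET.fromstring(line.strip())
    except ET.ParseError:
        return None  # empty, truncated or garbled line from the serial port
    if msg.find("hist") is not None:
        return None  # assumed: history messages carry <hist> and no live reading
    sensor = msg.findtext("sensor")
    if sensor is None:
        return None
    total = 0
    found = False
    for child in msg:
        # Channels are assumed to be named ch1, ch2, ch3.
        if re.fullmatch(r"ch\d", child.tag):
            watts = child.findtext("watts")
            if watts is not None:
                total += int(watts)  # int() copes with leading zeros
                found = True
    return (int(sensor), total) if found else None


print(parse_reading(SAMPLE))
```

Returning the sensor number alongside the watts matters for the multi-IAM case: each IAM reports under its own sensor number, so that is the value you would key different birds on.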

There are lots of questions – what sort of sounds should the system produce? How should they relate to the instantaneous power consumption? Should the relationship be linear, or something else? Should it be an ‘alarm’, alerting people to unusual or particularly high energy use, or a continuous soundtrack?** In this case, I decided that I wanted to build on a number of insights and anecdotes that had arisen during discussion of representing energy use in different ways:

- one of the householders with whom we’re working had mentioned in an interview that she could tell, from the sound of the washing machine, what stage it was at in its cycle, even from other rooms of the house.

- a remark from Greg Jackson of Intel’s ICRI Cities that the church bells he could hear from his office, chiming every 15 minutes, helped him establish a much better sense of what time it was, even when he didn’t consciously recall listening out for them

- the amount of birdsong I can hear (mostly sparrows and blackbirds) both lying in bed early in the morning, and from the hedge behind the garden shed where I work when I’m working from home, reinforced by a visit to the London Wetland Centre in Barnes a couple of weeks ago

- the uncanniness of the occasional silence as the New Bus for London or other hybrid buses pull into traffic, compared with the familiarity of increasing revs for acceleration

- the multi-sensory plug sockets produced by Ted Hunt during our ‘Seeing Things’ student workshop last year

- the idea of linking time and daily routines and patterns to energy use, e.g. Loove Broms & Karin Ehrnberger’s Energy AWARE Clock at the Interactive Institute.

- the notion of soundscapes, e.g. Dr Jamie Mackrill’s work at WMG with understanding and manipulating hospital soundscapes.

- a recording I made out of the window of my hotel room, on a trip to Doha, of the continuous sound of construction work, interspersed with occasional pigeons

- the popularity of things like Mashup: Jazz Rain Fire

- Gordon Pask’s Ear and attempts to recreate it

- the ‘clacking’ sound of split flap displays (e.g. mechanical railway departure boards) as an indicator that the display has updated, as Adrian McEwen and Hakim Cassimally point out in their Designing the Internet of Things.

All of this led to using birdsong as the sounds triggered – in the video here, blackbirds – at different intensities of song (volume, and number of birds) depending on the power measured by the CurrentCost, at 7 levels ranging from 5W to 1800W+. The files were adapted, in Audacity, from those available at the incredible Xeno-Canto – these include Creative Commons-licensed recordings by Jerome Fischer, Jordi Calvert, Roberto Lerco, Michele Peron, David M and Krzysztof Deoniziak. I also made sets of files using house sparrows, and herring gulls, which proved particularly irritating when testing in the office.
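The mapping from power to intensity level can be sketched roughly as below. Only the bottom (5W) and top (1800W) thresholds and the count of seven levels come from the description above; the intermediate values and the `level_for` name are placeholders chosen for illustration, not the ones used in the prototype.

```python
from bisect import bisect_right

# Lower bound, in watts, of levels 1 to 7.
# Only 5 and 1800 are from the post; the values in between are hypothetical.
THRESHOLDS_W = [5, 30, 100, 300, 600, 1000, 1800]


def level_for(watts):
    """Return 0 for silence (below 5W), otherwise an intensity level from 1 to 7."""
    return bisect_right(THRESHOLDS_W, watts)


# Roughly the three stages in the video: laptop, plus one heater element, plus two.
for watts in (40, 440, 840):
    print(watts, "W -> level", level_for(watts))
```

With these placeholder thresholds the three stages land on levels 2, 4 and 5. The steps are spaced roughly geometrically rather than linearly, which is one possible answer to the "linear or some other relationship" question: small loads such as a laptop still get an audible step, while the top level is reserved for heavy loads.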

The initial intention was to use multiple IAMs, with different birdsong for each appliance, played polyphonically if appliances are being used at the same time. This is the aim for the next version (and I’ll publish the code), but was stymied in this case by 1) my misunderstanding of the CurrentCost XML spec, and 2) a failed IAM, which together limited this particular version to one IAM (with multiple appliances plugged into it), at least if it was to be ready to show at a couple of events last week. The prototype you see/hear here, in all its Poundland lunchbox-encased glory, was demonstrated by Flora Bowden and me at the V&A Digital Futures event at BL-NK, near Old Street, and at the UK Art Science Prize ‘Energy of the Future’ event at the Derby Silk Mill. It was more a demo to show that it could work at all than anything particularly impressive.

What’s the overall aim with all this? It’s an exploration of what’s possible, or might be useful, in helping people develop a different kind of understanding of energy use, and the patterns of energy use in daily life – not just based on numerical feedback. If it’s design for behaviour change, it’s aiming to do so through increasing our understanding of, and familiarity with, the systems around us, making energy use something we can develop an instinctual feeling for, much like the sound of our car’s engine – once we’re familiar with it – effectively tells us when to change gear.

The next version will, hopefully, work with multiple appliances at once, playing polyphonic birdsong, and be somewhat better presented – I’ll post the code and schematics too – and, later in the year, might even be tested with some householders.

*Using a modified version of Colin R Williams’ code, in turn based on Francisco Anselmo’s.

**The distinction between model-based sonification and other approaches such as parameter-mapping sonification is useful here – many thanks to Chris Jack for this.

Thank you to Ross Atkin and Jason Mesut for suggestions! Blackbird photo by John Stratford, used under a CC licence.