Mac as a giant dongle

At Coding Horror, Jeff Atwood makes an interesting point about Apple’s lock-in business model:

It’s almost first party only– about as close as you can get to a console platform and still call yourself a computer… when you buy a new Mac, you’re buying a giant hardware dongle that allows you to run OS X software.

…

There’s nothing harder to copy than an entire MacBook. When the dongle — or, if you prefer, the “Apple Mac” — is present, OS X and Apple software runs. It’s a remarkably pretty, well-designed machine, to be sure. But let’s not kid ourselves: it’s also one hell of a dongle. If the above sounds disapproving in tone, perhaps it is. There’s something distasteful to me about dongles, no matter how cool they may be.

Of course, as with other dongles, there are plenty of people who’ve got round the Mac hardware ‘dongle’ requirement. Is it true to say (à la John Gilmore) that technical people interpret lock-ins (and other constraints) as damage and route around them?

Social status-based DRM

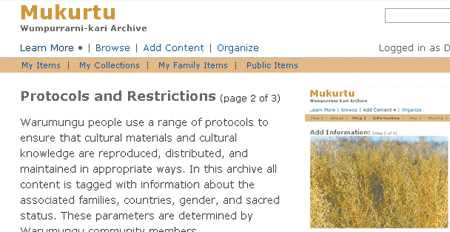

The BBC has a story about the Mukurtu Wumpurrarni-kari Archive, a digital photo archive developed by/for the Warumungu community in Australia’s Northern Territory. Because of cultural constraints, social status, gender and community background have been used to determine whether or not users can search for and view certain images:

It asks every person who logs in for their name, age, sex and standing within their community. This information then restricts what they can search for in the archive, offering a new take on DRM.

…

For example, men cannot view women’s rituals, and people from one community cannot view material from another without first seeking permission. Meanwhile images of the deceased cannot be viewed by their families.

It’s not completely clear whether the system is intended to help users censor themselves (i.e. they ‘know’ they ‘shouldn’t’ look at certain images, and the restrictions help them achieve that) or to stop users seeing things they ‘shouldn’t’, even if they want to. I think it’s probably the former, since there’s nothing to stop someone entering false details, although that assumes the idea of entering false details would occur to someone not experienced with computer login procedures, which it may not.
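To make the quoted rules concrete, here is a rough sketch of how a profile-based filter of this kind might work. It is not the archive’s actual implementation, which the BBC story does not describe: the `Profile` and `Image` classes, their field names, and the `can_view` function are all invented for illustration. Note that every check depends on attributes the user declares about themselves, which is exactly why false details would defeat it.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Profile:
    """Self-declared details collected at login (hypothetical fields)."""
    name: str
    sex: str
    community: str
    # Names of deceased relatives, as declared by the user.
    deceased_relatives: FrozenSet[str] = frozenset()
    # Other communities that have granted this user permission.
    permissions: FrozenSet[str] = frozenset()


@dataclass(frozen=True)
class Image:
    """Metadata attached to an archived image (hypothetical fields)."""
    title: str
    community: str
    restricted_to_sex: Optional[str] = None  # e.g. a women-only ritual
    depicts_deceased: Optional[str] = None   # name of a deceased person shown


def can_view(profile: Profile, image: Image) -> bool:
    """Apply the three rules quoted above; all rely on declared details."""
    # Rule 1: gender-restricted material is hidden from the other sex.
    if image.restricted_to_sex is not None and profile.sex != image.restricted_to_sex:
        return False
    # Rule 2: another community's material needs prior permission.
    if image.community != profile.community and image.community not in profile.permissions:
        return False
    # Rule 3: images of the deceased are hidden from their families.
    if image.depicts_deceased is not None and image.depicts_deceased in profile.deceased_relatives:
        return False
    return True
```

Nothing in a scheme like this verifies the profile, so a user who declares a different sex or community simply gets a different view of the archive. That is consistent with reading it as an aid to self-restraint rather than an enforcement mechanism.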

While from my western point of view, this kind of social status-based discrimination DRM seems complete anathema – an entirely arbitrary restriction on knowledge dissemination – I can see that it offers something aside from our common understanding of censorship, and if that’s ‘appropriate’ in this context, then I guess it’s up to them. It’s certainly interesting.

Nevertheless, imagining for a moment that there were a Warumungu community living in the EU, would DRM (or any other kind of access restriction) based on a) gender or b) social status not be illegal under European Human Rights legislation?

Disabling buttons


From Clientcopia:

Client: We don’t want the visitor to leave our site. Please leave the navigation buttons, but remove the links so that they don’t go anywhere if you click them.

It’s funny because the suggestion is such a crude way of implementing it, but it’s not actually that unlikely – a 2005 patent by Brian Shuster details a “program [that] interacts with the browser software to modify or control one or more of the browser functions, such that the user computer is further directed to a predesignated site or page… instead of accessing the site or page typically associated with the selected browser function” – and we’ve looked before at websites deliberately designed to break in certain browsers and at disabling right-click menus for arbitrary purposes.